Alright, I’m back. Time for part 2.

In the first part, I covered how we deal with bad data in batch processing; specifically, cutting out the bad data, replacing it, and running it again. But this strategy doesn’t work for immutable event streams as they are, well, immutable. You can’t cut out and replace bad data like you would in batch-processed data sets.

Thus, instead of repairing after the fact, the first technique we looked at is preventing bad data from getting into your system in the first place. Use schemas, tests, and data quality constraints to ensure your systems produce well-defined data. To be fair, this strategy would also save you a lot of headaches and problems in batch processing.

Prevention solves a lot of problems. But there’s still a chance that you’ll end up creating some bad data, such as a typo in a text string or an incorrect sum in an integer. This is where our next layer of defense, in the form of event design, comes in.

Event design plays a big role in your ability to fix bad data in your event streams. And much like using schemas and proper testing, this is something you’ll need to think about and plan for during the design of your application. Well-designed events significantly ease not only bad data remediation but also related concerns like compliance with GDPR and CCPA.

And finally, we’ll look at what happens when all other lights go out: you’ve wrecked your stream with bad data and it’s unavoidably contaminated. Then what? Rewind, Rebuild, and Retry.

But to start we’ll look at event design, as it will give you a much better idea of how to avoid shooting yourself in the foot from the get-go.

Fixing Bad Data Through Event Design

Event design heavily influences the impact of bad data and your options for repairing it. First, let’s look at State (or Fact) events, in contrast to Delta (or Action) events.

State events contain the entire statement of fact for a given entity (e.g., Order, Product, Customer, Shipment). Think of state events exactly like you would think about rows of a table in a relational database: each presents a complete accounting of information, along with a schema, well-defined types, and defaults (not shown in the picture for brevity’s sake).

State events enable event-carried state transfer (ECST), which lets you easily build and share state across services. Consumers can materialize the state into their own services, databases, and data sets, depending on their own needs and use cases.

Materializing is pretty simple. The consumer service reads an event (1) and then upserts it into its own database (2), and you repeat the process (3 and 4) for each new event. Every time you read an event, you have the option to react to its contents and apply your business logic.

Updating the data associated with Key “A” (5) results in a new event. That event is then consumed and upserted (6) into the downstream consumer data set, allowing the consumer to react accordingly. Note that your consumer is not obligated to store any data that it doesn’t require; it can simply discard unused fields and values.
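To make the read-then-upsert loop concrete, here’s a minimal sketch in plain Python. It assumes events arrive as (key, state) pairs and uses an in-memory dict in place of a real database; the `materialize` function, the `wanted_fields` parameter, and the customer fields are all made up for illustration.

```python
from typing import Any, Dict, Iterable, Optional, Set, Tuple

# A state event: (record key, the full state for that key).
Event = Tuple[str, Dict[str, Any]]


def materialize(
    events: Iterable[Event],
    wanted_fields: Optional[Set[str]] = None,
) -> Dict[str, Dict[str, Any]]:
    """Upsert each state event into a local table (a dict stands in for the database)."""
    table: Dict[str, Dict[str, Any]] = {}
    for key, state in events:
        if wanted_fields is not None:
            # The consumer keeps only the fields it cares about.
            state = {f: v for f, v in state.items() if f in wanted_fields}
        table[key] = state  # upsert: insert if new, overwrite if the key exists
    return table


# Hypothetical customer events; the second event for key "A" replaces the first.
events = [
    ("A", {"name": "Anna", "city": "Oslo", "tier": "gold"}),
    ("B", {"name": "Bob", "city": "Rome", "tier": "basic"}),
    ("A", {"name": "Anna", "city": "Bergen", "tier": "gold"}),
]
table = materialize(events, wanted_fields={"name", "city"})
```

After the loop, the entry for key “A” holds only the latest city, and the `tier` field was never stored because this consumer didn’t ask for it.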

Deltas, on the other hand, describe a change or an action. In the case of the Order, they describe item_added and order_checkout, though realistically you should expect many more deltas, particularly as there are many different ways to create, modify, add, remove, and change an entity.

Though I can (and do) go on and on about the tradeoffs of these two event design patterns, the important thing for this post is that you understand the difference between Delta and State events. Why? Because only State events benefit from topic compaction, which is critical for deleting bad, old, private, and/or sensitive data.

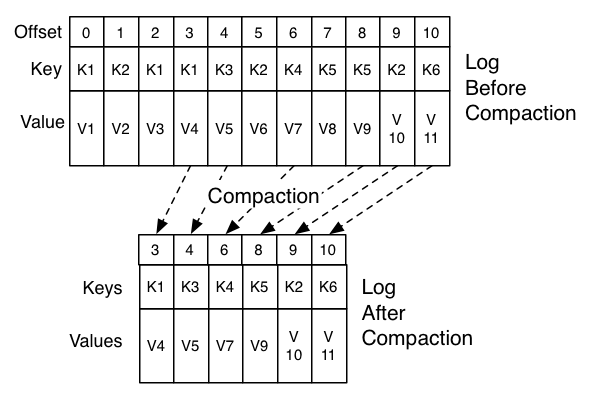

Compaction is a process in Apache Kafka that retains the latest value for each record key (e.g., Key = “A”, as above) and deletes older versions of that data with the same record key. Compaction enables the complete deletion of records via tombstones from the topic itself: all records of the same key that come before the tombstone will be deleted during compaction.
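Here’s a toy model of what compaction does to a log, just to show the semantics. It is not how the Kafka log cleaner is implemented: the `compact` function and the `drop_tombstones` flag are my own simplification, with a `None` value standing in for a tombstone.

```python
from typing import List, Optional, Tuple

# A record: (key, value). A value of None models a tombstone.
Record = Tuple[str, Optional[dict]]


def compact(log: List[Record], drop_tombstones: bool = False) -> List[Record]:
    """Keep only the latest record per key, preserving log order."""
    # Later offsets overwrite earlier ones, so each key maps to its last offset.
    latest_offset = {key: offset for offset, (key, _) in enumerate(log)}
    compacted: List[Record] = []
    for offset, (key, value) in enumerate(log):
        if latest_offset[key] != offset:
            continue  # an older version of this key: removed
        if value is None and drop_tombstones:
            continue  # models the tombstone itself eventually being removed
        compacted.append((key, value))
    return compacted


log = [
    ("A", {"v": 1}),
    ("B", {"v": 1}),
    ("A", {"v": 2}),   # supersedes the first "A"
    ("B", None),       # tombstone: deletes "B"
    ("C", {"v": 1}),
]
after_first_pass = compact(log)
after_tombstone_expiry = compact(log, drop_tombstones=True)
```

In the first pass the tombstone for “B” survives so that consumers still get a chance to see the delete; in the second, “B” is gone from the topic entirely.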

Aside from enabling deletion via compaction, tombstones also indicate to registered consumers that the data for that key has been deleted and they should act accordingly. For example, they should delete the associated data from their own internal state store, update any business operations affected by the deletion, and emit any associated events to other services.

Compaction contributes to the eventual correctness of your data, though your consumers will still need to deal with any incorrect side effects from earlier incorrect data. However, this is no different than if you were writing and reading to a shared database: any decisions made off the incorrect data, either through a stream or by querying a table, must still be accounted for (and reversed if necessary). The eventual correction only prevents future errors.

State Events: Fix It Once, Fix It Right

It’s really easy to fix bad state data. Just correct it at the source (e.g., the application that created the data), and the state event will propagate to all registered downstream consumers. Compaction will eventually clean up the bad data, though you can force compaction, too, if you can’t wait (perhaps due to security reasons).

You can fiddle around with compaction settings to better suit your needs, such as compacting ASAP or only compacting data older than 30 days (min.compaction.lag.ms=2592000000). Note that active Kafka segments can’t be compacted immediately; the segment must first be closed.
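If you’d rather not hand-compute millisecond values, a small helper does the arithmetic. The sketch below only builds a dictionary of topic-level settings; `cleanup.policy`, `min.compaction.lag.ms`, and `segment.ms` are Kafka topic configs, but the one-day segment roll is just an example value I picked, and `to_ms` is a helper invented here.

```python
from datetime import timedelta


def to_ms(delta: timedelta) -> int:
    """Convert a timedelta to whole milliseconds, the unit Kafka's *.ms configs use."""
    return int(delta.total_seconds() * 1000)


topic_config = {
    # Compact by key instead of deleting by age.
    "cleanup.policy": "compact",
    # Records younger than 30 days are not eligible for compaction.
    "min.compaction.lag.ms": str(to_ms(timedelta(days=30))),
    # Example value (an assumption): roll the active segment daily so it can be
    # closed, since only closed segments are compacted.
    "segment.ms": str(to_ms(timedelta(days=1))),
}
```

30 days works out to 30 × 24 × 60 × 60 × 1000 = 2,592,000,000 ms, which matches the value above.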

I like state events. They’re easy to use and map to database concepts that the vast majority of developers are already familiar with. Consumers can also infer the deltas of what has changed by comparing the latest event (n) to the previous state they hold (n-1). And even more, they can compare it to the state before that (n-2), before that (n-3), and so on (n-x), so long as you’re willing to keep and store that data in your microservice’s state store.
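Inferring a delta from two states is a plain field-by-field comparison. A minimal sketch, assuming each state is a flat dict (the `diff_state` name and the order fields are illustrative only):

```python
from typing import Any, Dict, Tuple


def diff_state(
    previous: Dict[str, Any], current: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Return {field: (before, after)} for every field that changed."""
    changes: Dict[str, Tuple[Any, Any]] = {}
    for field in previous.keys() | current.keys():
        before, after = previous.get(field), current.get(field)
        if before != after:
            changes[field] = (before, after)
    return changes


# State the consumer already holds (n-1) versus the event it just read (n).
stored = {"orderId": "o-1", "status": "open", "total": 10}
incoming = {"orderId": "o-1", "status": "paid", "total": 10}
delta = diff_state(stored, incoming)
```

Here `delta` contains only the `status` change, which is exactly the “what happened” information a delta event would have carried.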

“But wait, Adam!”

I’ve heard (many) times before.

“Shouldn’t we store as little data as possible so that we don’t waste space?”

Eh, Kinda.

Yes, you should be careful with how much data you move around and store, but only after a certain point. But this isn’t the 1980s, and you’re not paying $339.8 per MB for disk. You’re far more likely to be paying $0.08/GB-month for AWS EBS gp3, or you’re paying $0.023/GB-month for AWS S3.

State is cheap. Network is cheap. Be careful about cross-AZ costs, which some writers have identified as anti-competitive, but by and large, you don’t have to worry excessively about replicating data via State events.

Maintaining per-microservice state is very cheap these days, thanks to cloud storage services. And since you only need to keep the state your microservices or jobs care about, you can trim the per-consumer replication to a smaller subset in most cases. I’ll probably write another blog about the expenses of premature optimization, but just keep in mind that state events provide you with a ton of flexibility and let you keep complexity to a minimum. Embrace today’s cheap compute primitives, and focus on building useful applications and data products instead of trying to slash 10% of an event’s size (heck, just use compression if you haven’t already).

But now that I’ve ranted about state events, how do they help us fix the bad data? Let’s take a look at a few simple examples, one using a database source, one with a topic source, and one with an FTP source.

State Events and Fixing at the Source

Kafka Connect is the most common way to bootstrap events from a database. Updates made to a registered database’s table rows (Create, Update, Delete) are emitted to a Kafka topic as discrete state events.

You can, for example, connect to a MySQL, PostgreSQL, MongoDB, or Oracle database using Debezium (a change-data capture connector). Change-data events are state-type events and have both before and after fields indicating the state before and after the modification. You can find out more in the official documentation, and there are plenty of other articles written on CDC usage on the web.

To fix the bad data in your Kafka Connect-powered topic, simply fix the data in your source database (1). The change-data connector (CDC, 2a) takes the data from the database log, packages it into events, and publishes it to the compacted output topic. By default, the schema of your state type maps directly to your table source, so be careful if you’re going to go about migrating your tables.
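As a rough illustration of how a consumer picks up a fix made in the source database, here’s a sketch that applies change events shaped like a simplified Debezium envelope (`before`, `after`, and an `op` code). Real envelopes carry more metadata and the exact layout depends on your connector and converter settings, so treat the shape below as an assumption. The `apply_change_event` function and the product rows are made up.

```python
from typing import Any, Dict, Optional


def apply_change_event(
    table: Dict[str, Dict[str, Any]],
    key: str,
    envelope: Optional[Dict[str, Any]],
) -> None:
    """Apply one change event to a local copy of the source table."""
    if envelope is None:
        table.pop(key, None)  # tombstone: nothing left to keep for this key
        return
    if envelope["op"] == "d":
        table.pop(key, None)  # row deleted at the source
    else:
        table[key] = envelope["after"]  # create/update: take the new row state


local_table: Dict[str, Dict[str, Any]] = {}

# 1. The row is created with a typo in the price.
apply_change_event(local_table, "p-1", {
    "op": "c", "before": None,
    "after": {"productId": "p-1", "price": 9999.0},
})
# 2. Someone fixes the row in the source database; CDC emits the correction.
apply_change_event(local_table, "p-1", {
    "op": "u",
    "before": {"productId": "p-1", "price": 9999.0},
    "after": {"productId": "p-1", "price": 99.99},
})
```

The consumer did nothing special to “repair” its data. It simply upserted the next event for the same key.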

Note that this process is exactly the same as what you would do for batch-based ETL. Fix the bad data at the source, rerun the batch import job, then upsert/merge the fixes into the landing table data set. This is simply the stream-based equivalent.

Similarly, a Kafka Streams application (2) can rely on compacted state topics (1) as its input, knowing that it will always eventually get the correct state event for a given record. Any events that it might publish (3) will also be corrected for its own downstream consumers.

If the service itself receives bad data (say a bad schema evolution or even corrupted data), it can log the event as an error, divert it to a dead-letter queue (DLQ), and continue processing the other data. (Note that we talked about dead-letter queues and validation back in part 1.)
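The divert-and-continue pattern looks roughly like this. It’s a sketch with a hand-rolled check standing in for real schema validation, and plain lists standing in for the output topic and the DLQ; `REQUIRED_FIELDS`, `validate`, and `consume` are names I made up.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema: field name -> required Python type.
REQUIRED_FIELDS = {"orderId": str, "total": float}


def validate(event: Dict[str, Any]) -> None:
    """Raise ValueError if the event doesn't match the expected shape."""
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(event.get(field), expected_type):
            raise ValueError(f"field {field!r} missing or not {expected_type.__name__}")


def consume(events: List[Dict[str, Any]]) -> Tuple[list, list]:
    """Process good events; divert bad ones to a dead-letter list and keep going."""
    processed, dead_letters = [], []
    for event in events:
        try:
            validate(event)
        except ValueError as err:
            # Keep the original event and the reason so it can be inspected later.
            dead_letters.append({"event": event, "error": str(err)})
            continue
        processed.append(event)
    return processed, dead_letters


processed, dead_letters = consume([
    {"orderId": "o-1", "total": 12.5},
    {"orderId": "o-2", "total": "twelve"},  # bad type: goes to the DLQ
    {"orderId": "o-3", "total": 3.0},
])
```

The bad event doesn’t block the good ones behind it, and you keep enough context in the DLQ entry to figure out what went wrong.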

Lastly, consider an FTP directory where business partners (AKA the ones who give us money to advertise/do work for them) drop documents containing information about their business. Let’s say they’re dropping in information about their total product inventory so that we can display the current stock to the customer. (Yes, sometimes this is as close to event streaming as a partner is willing or able to get.)

We’re not going to run a full-time streaming job just idling away, waiting for updates to this directory. Instead, when we detect a file landing in the bucket, we can kick off a batch-based job (AWS Lambda?), parse the data out of the .xml file, and convert it into events keyed on the productId representing the current inventory state.

If our partner passes us bad data, we’re not going to be able to parse it correctly with our current logic. We can, of course, ask them nicely to resend the correct data (1), but we might also take the opportunity to investigate what the error is, to see if it’s a problem with our parser (2), and not their formatting. Some cases, such as if the partner sends a completely corrupted file, require it to be resent. In other cases, they may simply leave it to us data engineers to fix it up on our own.

So we identify the errors, add code updates and new test cases, and reprocess the data to ensure that the compacted output (3) is eventually accurate. It doesn’t matter if we publish duplicate events since they’re effectively benign (idempotent), and won’t cause any changes to the consumer’s state.
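Here’s what that parse-and-publish step might look like, along with a quick check that reprocessing the same file is harmless. The XML layout is entirely hypothetical (I have no idea what your partner’s files look like), and a dict again stands in for the consumer’s state.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict, List, Tuple

# Hypothetical partner file format.
INVENTORY_XML = """
<inventory>
  <product id="p-1"><quantity>5</quantity></product>
  <product id="p-2"><quantity>0</quantity></product>
</inventory>
"""


def parse_inventory(xml_text: str) -> List[Tuple[str, Dict[str, Any]]]:
    """Turn the inventory file into state events keyed on productId."""
    root = ET.fromstring(xml_text)
    events = []
    for product in root.findall("product"):
        product_id = product.get("id")
        quantity = int(product.findtext("quantity"))
        events.append((product_id, {"productId": product_id, "quantity": quantity}))
    return events


def upsert_all(state: Dict[str, Dict[str, Any]], events) -> None:
    for key, value in events:
        state[key] = value


consumer_state: Dict[str, Dict[str, Any]] = {}
upsert_all(consumer_state, parse_inventory(INVENTORY_XML))
state_after_first_run = dict(consumer_state)

# Reprocess the same file: duplicate state events leave the state unchanged.
upsert_all(consumer_state, parse_inventory(INVENTORY_XML))
```

Because each event carries the full state for its key, running the job twice gives the same result as running it once.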

That’s enough for state events. By now you should have a good idea of how they work. I like state events. They’re powerful. They’re easy to fix. You can compact them. They map well to database tables. You can store only what you need. You can infer the deltas from any point in time so long as you’ve stored them.

But what about deltas, where the event doesn’t contain state but rather describes some kind of action or transition? Buckle up.

Can I Fix Bad Data for Delta-Style Events?

“Now,” you might ask, “What about if I write some bad data into a delta-style event? Am I just straight out of luck?” Not quite. But the reality is that it’s a lot harder (like, a lot a lot) to clean up delta-style events than it is state-style events. Why?

The major obstacle to fixing deltas (and any other non-state event, like commands) is that you can’t compact them: no updates, no deletions. Every single delta is essential for ensuring correctness, as each new delta is in relation to the previous delta. A bad delta represents a change into a bad state. So what do you do when you get yourself into a bad state? You really have two strategies left:

- Undo the bad deltas with new deltas. This is a build-forward technique, where we simply add new data to undo the old data. (WARNING: This is very hard to accomplish in practice.)

- Rewind, rebuild, and retry the topic by filtering out the bad data. Then, restore consumers from a snapshot (or from the beginning of the topic) and reprocess. This is the final technique for repairing bad data, and it’s also the most labor-intensive and expensive. We’ll cover this more in the final section as it technically also applies to state events.

Both options require you to identify every single offset for each bad delta event, a task that varies in difficulty depending on the quantity and scope of bad events. The larger the data set and the more delta events you have, the more costly it becomes, especially if you have bad data across a large keyspace.

These strategies are really about making the best out of a bad situation. I won’t mince words: Bad delta events are very difficult to fix without intensive intervention!

But let’s look at each of these strategies in turn. First up, build-forward, and then to cap off this blog, rewind, rebuild, and retry.

Build-Forward: Undo Bad Deltas With New Deltas

Deltas, by definition, create a tight coupling between the delta event models and the business logic of consumer(s). There is only one way to compute the correct state, and an infinite number of ways to compute the incorrect state. And some incorrect states are terminal: a package, once sent, can’t be unsent, nor can a car crushed into a cube be un-cubed.

Any new delta events, published to reverse previous bad deltas, must put our consumers back to the correct good state without overshooting into another bad state. But it’s very challenging to guarantee that the published corrections will fix your consumer’s derived state. You would need to audit each consumer’s code and investigate the current state of their deployed systems to ensure that your corrections would indeed correct their derived state. It’s honestly just really quite messy and labor-intensive and will cost a lot in both developer hours and opportunity costs.
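A small example shows why a compensating delta can fix one consumer and still leave another in a bad state. Everything here is invented for illustration: two toy consumers read the same deposit deltas, where one sums a balance and the other raises a flag if the balance ever crosses a limit.

```python
from typing import Dict, List


def balance(deltas: List[Dict[str, int]]) -> int:
    """Consumer 1: derive the current balance by summing every delta."""
    return sum(d["amount"] for d in deltas)


def ever_exceeded(deltas: List[Dict[str, int]], limit: int) -> bool:
    """Consumer 2: flag the account if the running balance ever passes the limit."""
    running = 0
    for d in deltas:
        running += d["amount"]
        if running > limit:
            return True  # a one-way decision, like shipping a package
    return False


intended = [{"id": 1, "amount": 100}, {"id": 2, "amount": 100}]

# What was actually published: delta 2 carried 1000 instead of 100,
# so we build forward with a compensating delta of -900.
published = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 1000},   # the bad delta
    {"id": 3, "amount": -900},   # the correction
]

balance_ok = balance(published) == balance(intended)
flag_intended = ever_exceeded(intended, limit=500)
flag_published = ever_exceeded(published, limit=500)
```

The balance consumer ends up correct, but the flagging consumer has already acted on the bad intermediate state, and no further delta un-flags it. That’s the audit-every-consumer problem in miniature.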

However… you may find success in using a delta strategy if the producer and consumer are tightly coupled and under the control of the same team. Why? Because you entirely control the production, transmission, and consumption of the events, and it’s up to you not to shoot yourself in the foot.

Fixing Delta-Style Events Sounds Painful

Yeah, it is. It’s one of the reasons why I advocate so strongly for state-style events. It’s so much easier to recover from bad data, to delete records (hello GDPR), to reduce complexity, and to ensure loose coupling between domains and services.

Deltas are popularly used as the basis of event sourcing, where the deltas form a narrative of all changes that have occurred in the system. Delta-like events have also played a role in informing other systems of changes but may require the parties to query an API to obtain more information. Deltas have historically been popular as a means of reducing disk and network usage, but as we saw when discussing state events, these resources are pretty cheap nowadays and we can be a bit more verbose in what we put in our events.

Overall, I recommend avoiding deltas unless you absolutely need them (e.g., event sourcing). Event-carried state transfer and state-type events work extremely well and simplify so much about dealing with bad data, business logic changes, and schema changes. I caution you to think very carefully about introducing deltas into your inter-service communication patterns and encourage you to only do so if you own both the producer and the consumer.

For Your Information: “Can I Just Include the State in the Delta?”

I’ve also been asked if we can use events like the following, where there is a delta AND some state. I call these hybrid events, but the reality is that they provide guarantees that are effectively identical to state events. Hybrid events give your consumers some options as to how they store state and how they react. Let’s look at a simple money-based example.

Key: {accountId: 6232729}

Value: {debitAmount: 100, newTotal: 300}

In this example, the event contains both the debitAmount ($100) and the newTotal of funds ($300). But note that by providing the computed state (newTotal=$300), it frees the consumers from computing it themselves, just like plain old state events. There’s still a chance the consumer will build a bad aggregate using debitAmount, but that’s on them; you already provided them with the correct computed state.
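To see the difference in practice, here’s a sketch of two consumers reading the same hybrid events, with one event delivered twice. The second event and the duplicate delivery are my own additions to the example above, and both consumer functions are illustrative.

```python
from typing import Dict, List

events: List[Dict[str, int]] = [
    {"accountId": 6232729, "debitAmount": 100, "newTotal": 300},
    {"accountId": 6232729, "debitAmount": 100, "newTotal": 300},  # duplicate delivery
    {"accountId": 6232729, "debitAmount": 25, "newTotal": 275},   # hypothetical follow-up
]


def total_from_state(events: List[Dict[str, int]]) -> int:
    """Uses the provided state: just keep the latest newTotal."""
    total = 0
    for event in events:
        total = event["newTotal"]  # overwrite, so duplicates are harmless
    return total


def debited_from_deltas(events: List[Dict[str, int]]) -> int:
    """Builds its own aggregate from the delta part of the event."""
    return sum(event["debitAmount"] for event in events)


state_total = total_from_state(events)
delta_sum = debited_from_deltas(events)
```

The state-based consumer lands on 275 regardless of the duplicate. The delta-based consumer counts $225 debited when only $125 actually was, because it has to get deduplication right on its own.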

There’s not much point in only sometimes including the current state. Either your consumers are going to depend on it all the time (state event) or not at all (delta event). You may say you want to reduce the data transfer over the wire. Fine. But the vast majority of the time, we’re only talking about a handful of bytes, and I encourage you not to worry too much about event size until it costs you enough money to bother addressing. If you’re REALLY concerned, you can always invest in a claim-check pattern.

But let’s move on now to our last bad-data-fixing strategy.

The Last Resort: Rewind, Rebuild, and Retry

Our last strategy is one that you can apply to any topic with bad data, be it delta, state, or hybrid. It’s expensive and risky. It’s a labor-intensive operation that costs a lot of people hours. It’s easy to screw up, and doing it once will make you never want to do it again. If you’re at this point you’ve already had to rule out our previous strategies.

Let’s just look at two example scenarios and how we would go about fixing the bad data.

Rewind, Rebuild, and Retry from an External Source

In this scenario, there’s an external source from which you can rebuild your data. For example, consider an nginx or gateway server, where we parse each row of the log into its own well-defined event.

What caused the bad data? We deployed a new logging configuration that changed the format of the logs, but we didn’t update the parser in lockstep (tests, anyone?). The server log file remains the replayable source of truth, but all of our derived events from a given point in time onwards are malformed and must be repaired.

Solution

If your parser/producer is using schemas and data quality checks, then you could have shunted the bad data to a DLQ. You would have protected your consumers from the bad data but delayed their progress. Repairing the data in this case is simply a matter of updating your parser to accommodate the new log format and reprocessing the log files. The parser then produces correct events that satisfy the schema and data quality checks, and your consumers can pick up where they left off (though they still need to handle the fact that the data is late).

But what happens if you didn’t protect the consumers from bad data, and they’ve gone and ingested it? You can’t feed them hydrogen peroxide to make them vomit it back up, can you?

Let’s check how we’ve gotten here before going further:

- No schemas (otherwise we would have failed to produce the events)

- No data quality checks (ditto)

- Data is not compactable and the events have no keys

- Consumers have gotten into a bad state because of the bad data

At this point, your stream is so contaminated that there’s nothing left to do but purge the whole thing and rebuild it from the original log files. Your consumers are also in a bad state, so they’re going to need to reset either to the beginning of time or to a snapshot of internal state and input offset positions.

Restoring your consumers from a snapshot or savepoint requires planning ahead (prevention, anyone?). Examples include Flink savepoints, MySQL snapshots, and PostgreSQL snapshots, to name just a few. In every case, you’ll need to ensure that your Kafka consumer offsets are synced up with the snapshot’s state. For Flink, the offsets are stored along with the internal state. With MySQL or PostgreSQL, you’ll need to commit the offsets into the database and restore them from it, so they align with the internal state. If you have a different data store, you’ll have to figure out the snapshotting and restores on your own.
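One way to keep offsets aligned with state in a relational store is to write both in the same transaction, so any snapshot of the database captures a matching pair. Here’s a minimal sketch using SQLite from the Python standard library as a stand-in for MySQL or PostgreSQL; the table layout and function names are made up.

```python
import sqlite3


def open_store() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE state (key TEXT PRIMARY KEY, value INTEGER)")
    conn.execute(
        "CREATE TABLE offsets ("
        "topic TEXT, part INTEGER, next_offset INTEGER, "
        "PRIMARY KEY (topic, part))"
    )
    return conn


def apply_event(conn, topic: str, part: int, offset: int, key: str, value: int) -> None:
    """Upsert the state and record the next offset to read, atomically."""
    with conn:  # one transaction: both writes commit, or neither does
        conn.execute(
            "INSERT OR REPLACE INTO state (key, value) VALUES (?, ?)", (key, value)
        )
        conn.execute(
            "INSERT OR REPLACE INTO offsets (topic, part, next_offset) VALUES (?, ?, ?)",
            (topic, part, offset + 1),
        )


def resume_position(conn, topic: str, part: int) -> int:
    """Where to seek the consumer after restoring this database from a snapshot."""
    row = conn.execute(
        "SELECT next_offset FROM offsets WHERE topic = ? AND part = ?", (topic, part)
    ).fetchone()
    return row[0] if row else 0


conn = open_store()
apply_event(conn, "orders", 0, 41, "A", 7)
position = resume_position(conn, "orders", 0)
```

After a restore, you’d seek each partition to its stored position rather than trusting whatever the consumer group last committed to the broker.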

As mentioned earlier, this is a very expensive and time-consuming resolution to your situation, but there’s not much else to expect if you use no preventative measures and no state-based compaction. You’re just going to have to pay the price.

Rewind, Rebuild, and Retry With the Topic as the Source

If your topic is your one and only source, then any bad data is your fault and your fault alone. If your events have keys and are compactable, then just publish the good data over top of the bad. Done. But what if we can’t compact the data because it doesn’t represent state? Instead, let’s say it represents measurements.

Consider this scenario. You have a customer-facing application that emits measurements of user behavior to the event stream (think clickstream analytics). The data is written directly to an event stream through a gateway, making the event stream the single source of truth. But because you didn’t write tests or use a schema, malformed data has accidentally been written directly to the topic. So now what?

Solution

The only thing you can do here is reprocess the “bad data” topic into a new “good data” topic. Just as when using an external source, you’re going to have to identify all of the bad data, such as by a unique characteristic in the malformed data. You’ll need to create a new topic and a stream processor to convert the bad data into good data.

This solution assumes that all of the necessary data is available in the event. If that’s not the case, then there’s little you can do about it. The data is gone. This isn’t CSI: Miami where you can yell, “Enhance!” to magically pull the data out of nowhere.

So let’s assume you’ve fixed the data and pushed it to a new topic. Now all you need to do is port the producer over, then migrate all of the existing consumers. But don’t delete your old stream yet. You may have made a mistake migrating it to the new stream and may need to fix it again.

Migrating consumers isn’t easy. A polytechnical company will have many different languages, frameworks, and databases in use by their consumers. To migrate consumers, for example, we typically have to:

- Stop each consumer, and reload their internal state from a snapshot made prior to the timestamps of the first bad data.

- That snapshot must align with the offsets of the input topics, such that the consumer will process each event exactly once. Not all stream processors can guarantee this (but it’s something that Flink is good at, for example).

- But wait! You created a new topic that filtered out bad data (or added missing data). Thus, you’ll need to map the offsets from the original source topic to the new offsets in the new topic.

- Resume processing from the new offset mappings, for each consumer.
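The repair-and-remap steps can be sketched for a single partition as follows. This is a simplification under stated assumptions: lists stand in for the old and new topics, bad events are either repaired in place or dropped (never added), and `rebuild`, `is_bad`, and `repair` are hypothetical names. The offset map answers one question: if a consumer’s snapshot says its next offset in the old topic is k, where should it resume in the new topic?

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Event = Dict[str, Any]


def rebuild(
    old_topic: List[Event],
    is_bad: Callable[[Event], bool],
    repair: Callable[[Event], Optional[Event]],
) -> Tuple[List[Event], Dict[int, int]]:
    """Copy old_topic to a new topic, repairing or dropping bad events.

    Returns the new topic and a map of old offset -> new offset to resume from.
    """
    new_topic: List[Event] = []
    offset_map: Dict[int, int] = {}
    for old_offset, event in enumerate(old_topic):
        # Everything before old_offset that survived is already in new_topic.
        offset_map[old_offset] = len(new_topic)
        if is_bad(event):
            fixed = repair(event)
            if fixed is None:
                continue  # unrecoverable: filtered out of the new topic
            event = fixed
        new_topic.append(event)
    offset_map[len(old_topic)] = len(new_topic)  # for consumers that were fully caught up
    return new_topic, offset_map


def is_bad(event: Event) -> bool:
    clicks = event.get("clicks")
    return not isinstance(clicks, int) or clicks < 0


def repair(event: Event) -> Optional[Event]:
    clicks = event.get("clicks")
    if isinstance(clicks, str) and clicks.isdigit():
        return {**event, "clicks": int(clicks)}  # recoverable: it was just a string
    return None  # e.g. a negative count: nothing to recover


old_topic = [{"clicks": 1}, {"clicks": -5}, {"clicks": "3"}, {"clicks": 2}]
new_topic, offset_map = rebuild(old_topic, is_bad, repair)
```

A consumer restored from a snapshot taken at old offset 2 would resume at new offset 1, since one of the two events before it was dropped. With multiple partitions you’d need one such map per partition.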

If your application doesn’t have a database snapshot, then we must delete the entire state of the consumer and rebuild it from the start of time. This is only possible if every input topic contains a full history of all deltas. Introduce even just one non-replayable source and this is no longer possible.

Summary

In Part 1, I covered how we do things in the batch world, and why that doesn’t transfer well to the streaming world. While event stream processing is similar to batch-based processing, there is significant divergence in strategies for handling bad data.

In batch processing, a bad dataset (or partition of it) can be edited, corrected, and reprocessed after the fact. For example, if my bad data only affected computations pertaining to 2024-04-22, then I can simply delete that day’s worth of data and rebuild it. In batch, no data is immutable, and everything can be blown away and rebuilt as needed. Schemas tend to be optional, imposed only after the raw data lands in the data lake/warehouse. Testing is sparse, and reprocessing is common.

In streaming, data is immutable once written to the stream. The techniques that we can use to deal with bad data in streaming differ from those in the batch world.

- The first one is to prevent bad data from entering the stream. Robust unit, integration, and contract testing, explicit schemas, schema validation, and data quality checks each play important roles. Prevention remains one of the most cost-effective, efficient, and important strategies for dealing with bad data: just stop it before it even starts.

- The second is event design. Choosing a state-type event design allows you to rely on republishing records of the same key with the updated data. You can set up your Kafka broker to compact away old data, eliminating incorrect, redacted, and deleted data (such as for GDPR and CCPA compliance). State events allow you to fix the data once, at the source, and propagate it out to every subscribed consumer with little-to-no extra effort on your part.

- Third and finally is Rewind, Rebuild, and Retry. A labor-intensive intervention, this strategy requires you to manually intervene to mitigate the problems of bad data. You must pause consumers and producers, fix and rewrite the data to a new stream, and then migrate all parties over to the new stream. It’s expensive and complex and is best avoided if possible.

Prevention and good event design will provide the bulk of the value for helping you overcome bad data in your event streams. The most successful streaming organizations I’ve worked with embrace these principles and have integrated them into their normal event-driven application development cycle. The least successful ones have no standards, no schemas, no testing, and no validation. It’s a wild west, and many a foot is shot.

In any case, if you have any scenarios or questions about bad data and streaming, please reach out to me on LinkedIn. My goal is to help tear down misconceptions and address concerns around bad data and streaming, and to help you build up confidence and good practices for your own use cases. Also, feel free to let me know what other topics you’d like me to write about; I’m always open to suggestions.